Quality attributes and constraints

Unfortunately, there is no escape from making trade-offs (not even in a parallel universe)

We want more quality (almost always)

The word “quality” is not mentioned in the Universal Declaration of Human Rights, but it is not hard to see why increasing quality makes life generally better.

As consumers, we want our food to be fresh, nutritious and tasty. The cars we drive should be reliable, safe and comfortable. We want the new coffee machine to last long, be easy to use and grind quietly.

As workers, we take pride in producing quality results. When the things we create do what they are supposed to do and our bosses and clients are happy, we gain satisfaction.

There are also downsides to quality, though. Quality products take more time and effort to produce and carry a higher risk of economic failure (which can motivate producers to cheat or take shortcuts). A high quality goal may also turn out to be unachievable or unrealistic, which can be frustrating.

Still, although quality has a cost, neglecting to consider quality requirements will, in most cases, have negative long-term consequences.

I already mentioned some quality attributes: fresh, reliable, safe, quiet and so on. The list is endless. The Wikipedia article about software quality attributes[1] mentions only "notable attributes" and still arrives at over 50 entries.

Picking desirable attributes is easy. The more the better, one may think. The problem is that every attribute has an opposing counterpart. Optimizing one attribute always means clashing with another one. If we value two opposing attributes equally, we are in a predicament. This leads to the unfortunate fact of life that there is no escape from having to make trade-offs.

Why are there always trade-offs?

Have you wondered why that is, actually? Why is there not one quality aspect that we can optimize without any downsides?

There are two reasons:

Resources are limited

Inherent logical constraints

Resources are limited

The most fundamental resources are time and money. If you want to develop lots of features, it will take more time (and/or more money). If you want something developed faster, it will cost more (and/or include fewer features). Scope, time and cost create a *triangle* that bounds every project we undertake. In this triangle, we can optimize two corners but never all three:

More features & faster => that costs more

Cheaper & faster => we have to drop features

Cheaper & more features => that requires more time

We cannot escape this limitation.
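The three combinations above can be written down as a small lookup. This is only a sketch: the names `CORNERS`, `CONSEQUENCE` and `trade_off` are made up for illustration, and the consequences are taken word for word from the list above.

```python
# Hypothetical sketch of the scope/time/cost triangle described above.
CORNERS = {"scope", "time", "cost"}

# What happens to the one corner that is NOT optimized.
CONSEQUENCE = {
    "cost": "that costs more",
    "scope": "we have to drop features",
    "time": "that requires more time",
}


def trade_off(optimized: set[str]) -> str:
    """Return the consequence of optimizing exactly two corners."""
    if len(optimized) != 2 or not optimized <= CORNERS:
        raise ValueError("pick exactly two of: scope, time, cost")
    (sacrificed,) = CORNERS - optimized  # the single remaining corner
    return CONSEQUENCE[sacrificed]


print(trade_off({"scope", "time"}))  # more features & faster
print(trade_off({"cost", "time"}))   # cheaper & faster
print(trade_off({"cost", "scope"}))  # cheaper & more features
```

Asking for all three corners at once raises an error, which is the whole point of the triangle.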

What if we lived in a parallel universe?

Let's imagine a world where automation can produce anything instantly. Then we can keep making things cheaper while continuing to add more features (this is, to a certain degree, what progress in the modern world is all about).

If we can, in addition, imagine that resources in this world are also unlimited and free to produce, then yes, we can simply add more and more features without requiring more time or more money.

Yet, even in this imaginary fantastic world, we would still not be free of having to make trade-offs.

Logical constraints

Even if we can produce anything immediately and at no cost, we are still bound by this other nasty, inescapable condition called complexity.

Let's say you want simplicity and flexibility at the same time. You want your coffee machine to be as easy to use as possible. So you give it exactly one button. Put your mug in place, press the button. That's it. However, if you also want to be able to configure your coffee to your many tastes (foam strength, roast intensity, amount of water), that's not going to work with just one button. You will need many buttons (or other types of widgets).

What if, in the future, we have brain-computer interfaces, where I can just think of a "cappuccino, extra hot, half milk, strong roast"? I wouldn't need any dial at all and yet could configure whatever coffee I can imagine. Have we escaped the trade-off?

No. All we have done is move the problem from the interface of the coffee machine to the interface of the brain-computer chip. Instead of your fingers, you will use your brain waves to interact with the machine. Now you want a chip that works for all "brain types". How many nerve contacts does the chip require? The trade-off is now simple to implant versus high "brain compatibility" (you may be surprised, but I am actually not a brain surgeon, so I am entirely making things up here).

The thing is: complexity can only be shifted, not reduced[2].
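One way to make this concrete for the coffee machine is to count. The option counts below are invented for illustration. The lower bound itself is a general fact: to single out one of N configurations, the user has to supply at least log2(N) yes/no decisions, whether through buttons, dials or thoughts.

```python
import math

# Hypothetical option counts for the coffee machine; the numbers are made up.
options = {"foam_strength": 3, "roast_intensity": 4, "water_amount": 5}

# Number of distinct coffees the machine can be asked to make.
configurations = math.prod(options.values())

# Lower bound on the yes/no decisions needed to pick exactly one of them.
min_binary_choices = math.ceil(math.log2(configurations))

print(configurations)      # 60
print(min_binary_choices)  # 6
```

A one-button machine gets away with zero decisions only because it offers a single configuration. Changing the interface changes where those decisions are made, not how many are needed.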

As with limited resources, there is a boundary of complexity that we can't escape.

We also have complexity constraint triangles. Consider a classic software architecture triangle[3] of modularity, cohesion and coupling.

You want high cohesion with low coupling - every function does one thing, there are few to no dependencies - but now you need to duplicate functionality across your system, so you won't be able to achieve clean modularity.

The same is true for high modularity with low coupling - clean modules with little dependency on each other - now your modules need to have multiple responsibilities.

High modularity with high cohesion - cleanly separated modules that do one or only a few things well - now you will have lots of cross-references and dependencies between the modules, so they will be tightly coupled.
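A toy example shows two corners of this triangle pulling against each other. Everything here is hypothetical: the function names, the call list and the two metrics are crude stand-ins for coupling and cohesion, not established measures.

```python
from collections import Counter

# Hypothetical toy system: four functions and the calls between them.
calls = [("parse", "validate"), ("validate", "store"), ("store", "notify")]


def cross_module_calls(assignment: dict[str, str]) -> int:
    """Count calls that cross a module boundary (a crude coupling measure)."""
    return sum(assignment[a] != assignment[b] for a, b in calls)


def largest_module(assignment: dict[str, str]) -> int:
    """Size of the biggest module (a crude proxy for 'many responsibilities')."""
    return max(Counter(assignment.values()).values())


# One big module: no calls cross a boundary, but it does four things.
monolith = {"parse": "app", "validate": "app", "store": "app", "notify": "app"}

# One function per module: each does one thing, but every call crosses a boundary.
split = {"parse": "input", "validate": "rules", "store": "db", "notify": "mail"}

print(cross_module_calls(monolith), largest_module(monolith))  # 0 4
print(cross_module_calls(split), largest_module(split))        # 3 1
```

Any assignment in between moves one number down only by moving the other one up.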

Pick your attributes well

As we can see now, it is important to choose your quality goals well. Otherwise, you may have to explain to your client that they can't have it both ways. This should be the first thing to figure out with stakeholders and everyone interested in what you want to design and deliver: what are the trade-offs to make, and where do we plant our goalposts between competing quality requirements?

We already said there are many attributes to choose from resulting in all sorts of trade-offs. Where do we start? That's what quality frameworks are for.

There are many to choose from (ISO/IEC 25010 etc.). I want to briefly introduce the Arc42 Quality Model because it is nicely condensed and intuitive. Instead of considering all possible attributes (which run into the hundreds in some models), it lists eight key system properties that connect to everything else.

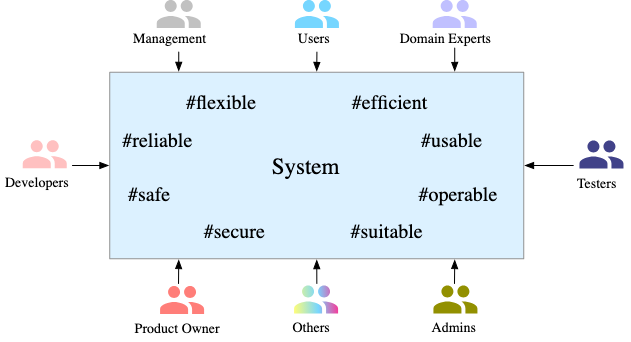

The properties are (diagram taken from their website):

I like to take these properties and think about priorities. The main goal of quality frameworks is to describe the complete landscape of all possible attributes, to ensure every angle of a system has been considered. But before thinking about completeness, we need to decide what to build first and what to focus on (especially in agile settings). This is useful for any project and can help steer the discussion between stakeholders and those who implement the solution.

If you could choose exactly one goal for any project, which one would it be? I think it is this: the most important objective is to deliver what the client asked us to build.

There is no point in shipping something highly performant if it doesn't solve the client's problem. If your employer asks you to build a shopping website and you deliver banking software, it simply does not matter that it is the fastest, most secure piece of software ever conceived.

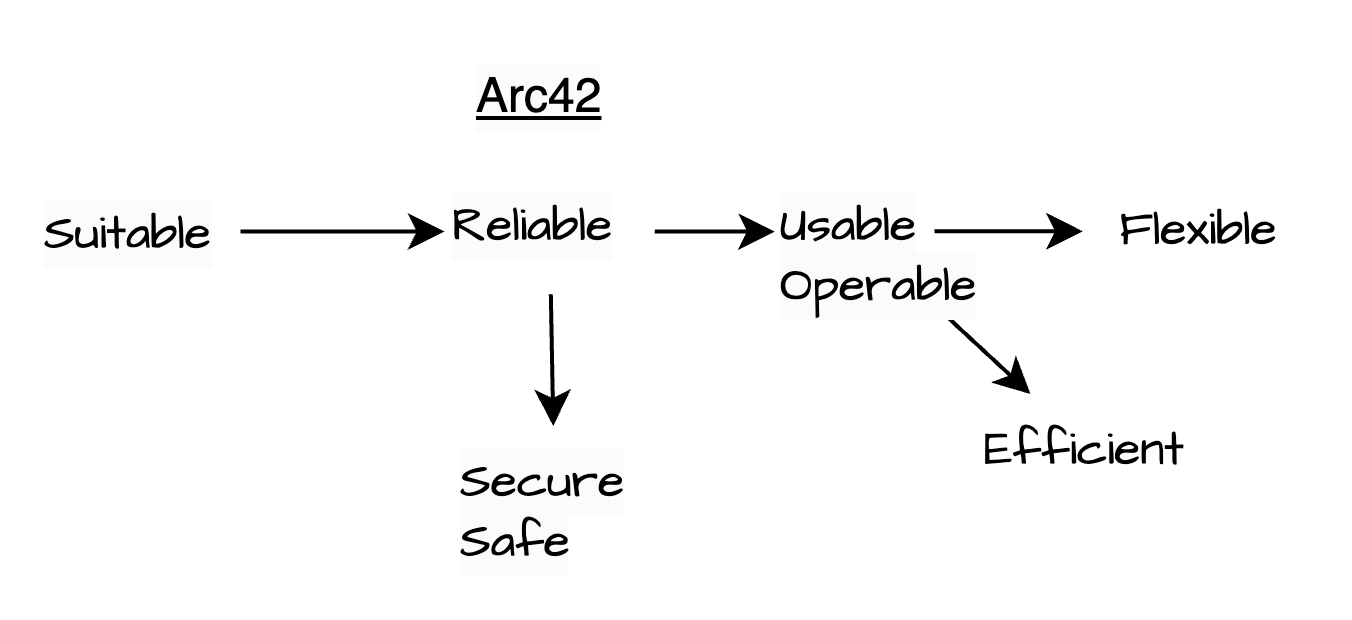

Arc42 calls this property suitable, and I think it should be the first thing to think about. Suitability is where functional requirements meet non-functional quality attributes. As with every system property, suitable means something different depending on the role someone has. Suitable code for a developer means something entirely different from a suitable application for a user. It depends on what matters to the client, but in general, we first need to make sure we understand what it means to build the "right" solution.

Next, the solution we deliver should be reliable. Delivering exactly what the client needs is great, but it does not matter much if the product does not work reliably. As with every attribute, there is a range. Reliable can mean performance, uptime, correctness etc. In the beginning, for example in a proof-of-concept project, it may be fine for the client if the solution does not work all the time, as long as everyone agrees on what the expectations are.

Two properties can be as important as reliability: secure and safe. They are often inherently important, but given their high value (or risk of damage), it is particularly important to specify them.

What is the difference? It is "safe to use" versus "secure from misuse". If there is any potential for personal harm to a user, safety is even more important than reliability. On the security side, if all we deliver is a website that shows public data, it may be acceptable to ship it without any user authentication.

Next, I see operable and usable as equally important properties. So far, we are building a product that does what it should and that is secure and safe; now we need to consider its operation and use. In terms of requirements, this is where MVPs often neglect operability and usability in favor of speed and cost. A product that solves an urgent need may not be the most usable tool. It may also be difficult to operate or deploy, but as long as we are able to get it out there and receive feedback, we can be fine with little automation and a lot of manual work.

As the remaining two properties deal more with future requirements, they have lower priority. After a successful launch, and once our application has proven that there is demand for it, we want it to be efficient. Running software consumes resources, and if it does so inefficiently, the business may fail when costs outweigh profits (the fate of many cloud apps).

Finally, our solution is working in full swing and new requirements come in regularly. Hopefully we have built something that is flexible, our final attribute. How well can we extend or adjust the product and evolve over time?
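The ordering walked through above can be captured in a small table and used to sort a backlog. This is one possible reading of the text: the tier numbers are my own encoding, and the requirement texts are invented examples.

```python
# Priority tiers as walked through above; a lower number is considered earlier.
# The numeric tiers are an assumption made for this sketch.
PRIORITY = {
    "suitable": 1,
    "reliable": 2, "secure": 2, "safe": 2,
    "operable": 3, "usable": 3,
    "efficient": 4,
    "flexible": 5,
}

# Hypothetical requirements, each tagged with the property it mainly serves.
requirements = [
    ("Add new payment providers without a rewrite", "flexible"),
    ("Checkout works for the agreed product catalogue", "suitable"),
    ("Card data is stored encrypted", "secure"),
    ("Deploy with one command", "operable"),
]

# sorted() is stable, so requirements in the same tier keep their given order.
ordered = sorted(requirements, key=lambda req: PRIORITY[req[1]])

for text, prop in ordered:
    print(f"{PRIORITY[prop]} {prop:<9} {text}")
```

The tiers are a starting point for the conversation with stakeholders, not a fixed ranking. A safety-critical product, for instance, would move safe to the top.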

If you consider those eight properties together, you cover a lot of ground.

The resulting map looks like this:

Another famous example of an inescapable trade-off is the CAP theorem: https://en.wikipedia.org/wiki/CAP_theorem